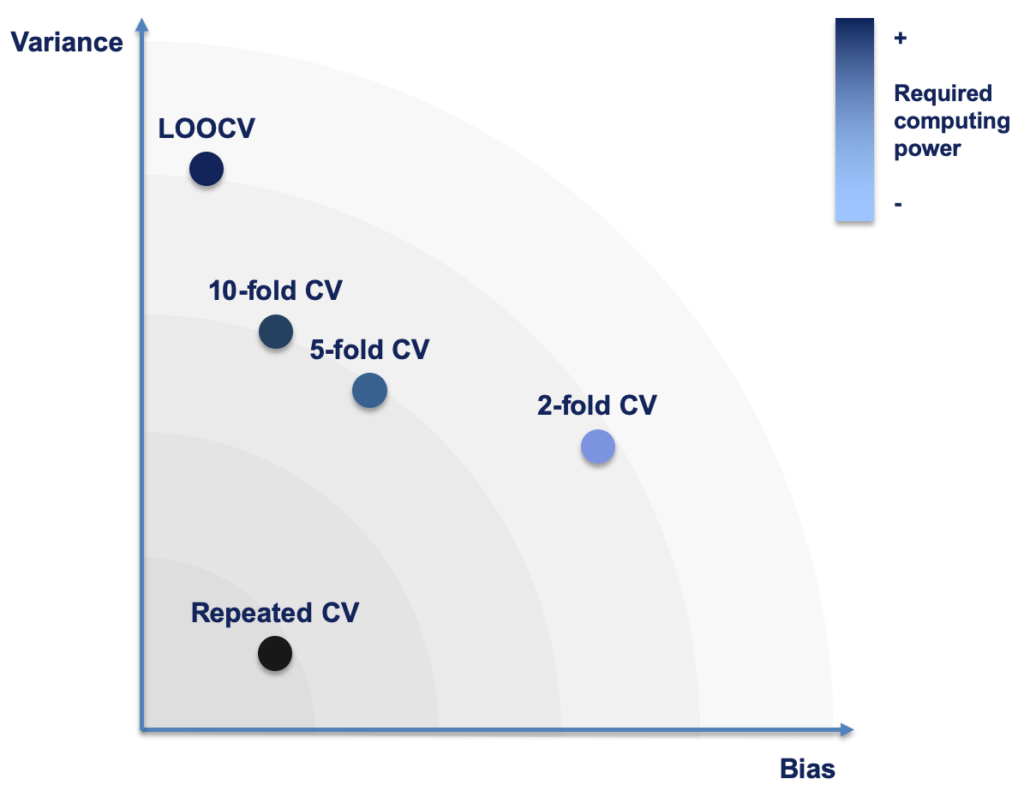

Properties. The measured data are used to describe a model in which each layer refers to a given material. The model uses mathematical relations called dispersion formulae, which help evaluate the thickness and optical properties of the material by adjusting specific fit parameters. This application note deals with the Lorentzian dispersion formula.

To estimate a model's bias, we take the average of all the errors: the lower the average, the better the model. Similarly, to calculate the model's variance, we take the standard deviation of all the errors. A low standard deviation suggests that the model does not vary much across different subsets of the training data.

Evaluate model performance: now that you have both the actual and predicted values for each fold, you can compare them to measure performance. For this regression model, you will measure the mean absolute error (MAE) between these two vectors. This value tells you the average absolute difference between the actual and predicted values.

Accuracy can be calculated easily by dividing the number of correct predictions by the total number of predictions.
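A minimal sketch of the bias, variance, MAE and accuracy calculations described above, written in plain Python rather than R. It assumes that "errors" are signed differences (predicted minus actual) and uses the population standard deviation for the variance measure; the text specifies neither choice. All function names are hypothetical.

```python
from statistics import mean, pstdev

def signed_errors(actual, predicted):
    """Signed errors, assumed here to be predicted minus actual."""
    return [p - a for a, p in zip(actual, predicted)]

def bias(errors):
    """Bias as the average of all the errors (closer to 0 means less bias)."""
    return mean(errors)

def error_spread(errors):
    """Variance proxy: population standard deviation of the errors."""
    return pstdev(errors)

def mae(actual, predicted):
    """Mean absolute error: average absolute gap between actual and predicted."""
    return mean(abs(p - a) for a, p in zip(actual, predicted))

def accuracy(true_labels, predicted_labels):
    """Number of correct predictions divided by the total number of predictions."""
    correct = sum(1 for t, p in zip(true_labels, predicted_labels) if t == p)
    return correct / len(true_labels)

actual = [3.0, 5.0, 2.0, 7.0]
predicted = [2.5, 5.0, 3.0, 8.0]
errs = signed_errors(actual, predicted)
print(bias(errs))                  # 0.375
print(mae(actual, predicted))      # 0.625
print(accuracy(["a", "b", "b", "a"], ["a", "b", "a", "a"]))  # 0.75
```

With per-fold predictions, the same functions can be applied to each fold's actual and predicted vectors and the results compared across folds.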

Evaluate regression model performance. For an overview of related R functions used by Radiant to evaluate regression models, see Model > Evaluate. Model fitting is technically quite similar across the modeling methods that exist in R: most methods take a formula identifying the dependent and independent variables.
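In R, a call such as `lm(y ~ x, data = df)` uses the formula to mark `y` as the dependent variable and `x` as the independent variable. As a rough illustration of what a one-predictor linear model estimates, here is an ordinary least-squares sketch in Python. It is not R's own implementation, and the function names are hypothetical.

```python
from statistics import mean

def fit_simple_lm(x, y):
    """Least-squares fit of y = intercept + slope * x with a single predictor."""
    x_bar, y_bar = mean(x), mean(y)
    # Covariance-style numerator and variance-style denominator of the slope
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return intercept, slope

def predict(coefs, x):
    """Apply the fitted line to new values of the independent variable."""
    intercept, slope = coefs
    return [intercept + slope * xi for xi in x]

coefs = fit_simple_lm([1, 2, 3, 4], [3, 5, 7, 9])
print(coefs)                # (1.0, 2.0)
print(predict(coefs, [5]))  # [11.0]
```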

The “SMART objectives template” can guide you through the steps needed to define goals and SMART objectives. Innovation tracking: the CoP environment is proving to be a fertile one for generating new ideas and products, improving existing ones, and disseminating what is learned to improve the practice of public health.

- The dataset we will use to train the models.
- The test options used to evaluate a model (e.g., the resampling method).
- The metric we are interested in measuring and comparing.

Test dataset. The dataset we use to spot-check algorithms should be representative of our problem, but it does not have to be all of our data. Spot-checking algorithms must be fast.

There are two ways to evaluate the model on the training data. The first is to set the `data` argument to the same data frame used to train the model. The second is to use the `on_training = TRUE` argument. These are equivalent unless there is some random component among the explanatory terms, as with `mosaic::rand`, `mosaic::shuffle`, and so on.

Evaluate: model evaluation. Description: cross-validation of models with presence/absence data. Given a vector of presence and a vector of absence values…
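Given model predictions at presence sites and at absence sites, a common summary statistic is the AUC, which equals the probability that a randomly chosen presence scores higher than a randomly chosen absence. The pairwise Python sketch below computes that directly, counting ties as one half. It is O(n·m), meant for illustration only, and is not the R package's implementation; the function name is hypothetical.

```python
def auc_presence_absence(presence, absence):
    """AUC as the probability that a random presence score exceeds a random
    absence score, with ties counted as half a win."""
    if not presence or not absence:
        raise ValueError("need at least one presence and one absence value")
    wins = 0.0
    for p in presence:
        for a in absence:
            if p > a:
                wins += 1.0
            elif p == a:
                wins += 0.5
    return wins / (len(presence) * len(absence))

# Predicted suitability at presence sites vs. absence sites
print(auc_presence_absence([0.9, 0.8, 0.4], [0.3, 0.4, 0.1]))
```

An AUC of 1 means every presence outranks every absence; 0.5 is what a model with no discriminating power would score on average.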

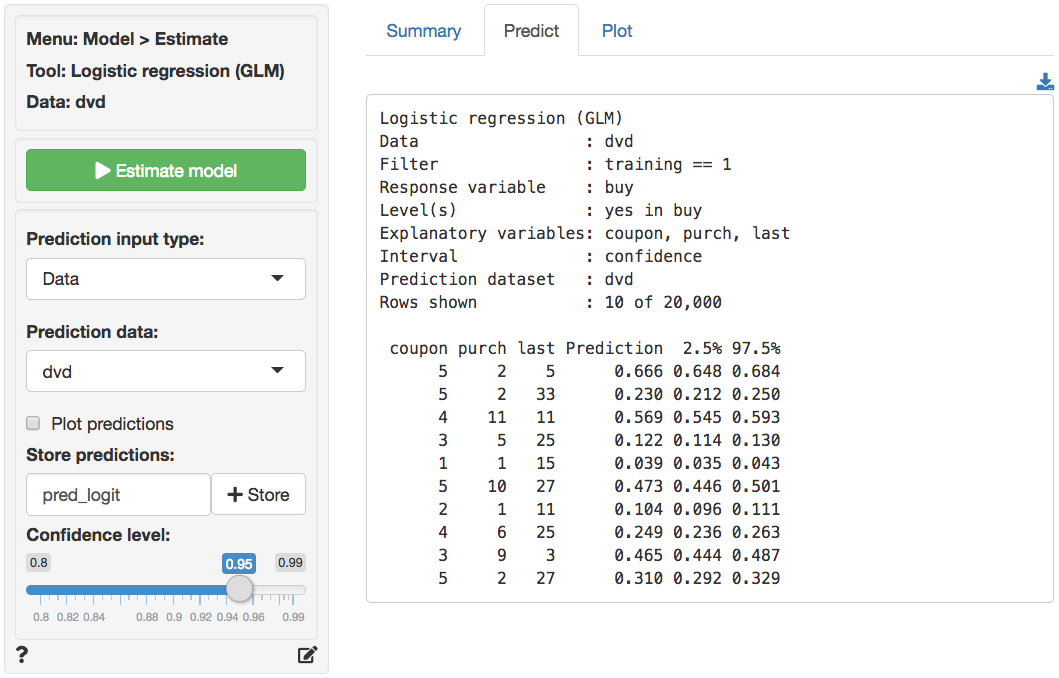

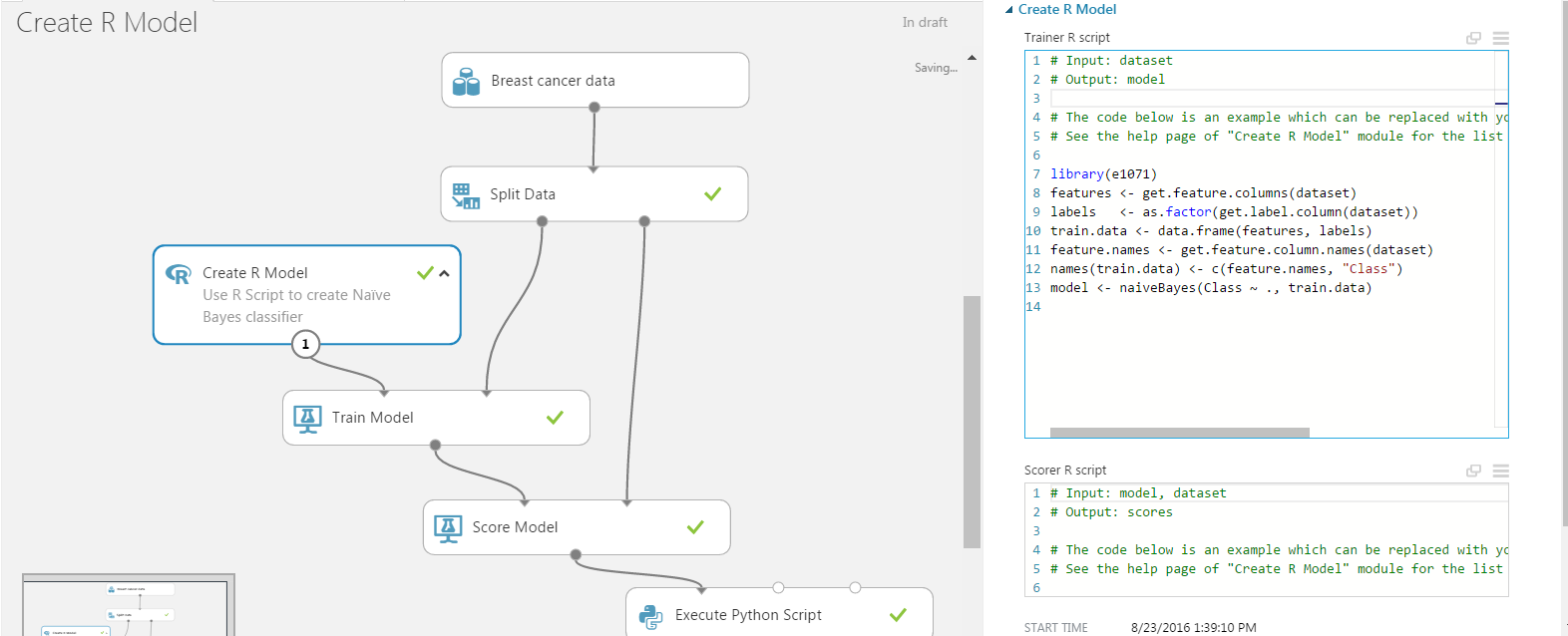

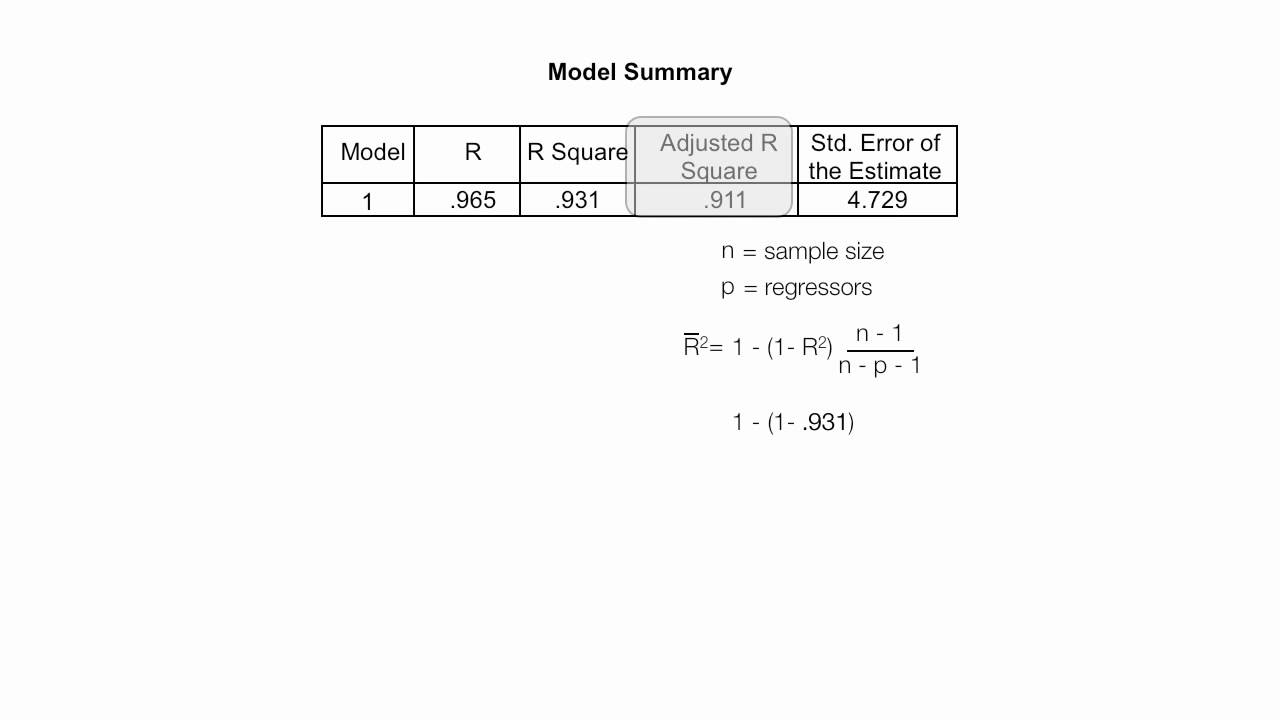

We also introduced workflows as a way to bundle a parsnip model and recipe.

This question is really quite broad and should be focused a bit, but here's a small subset of functions written to work with linear models:

The mean squared error, mean absolute error, root mean squared error, and R-squared (coefficient of determination) metrics are used to evaluate the performance of a model in regression analysis. Model evaluation: R-squared, adjusted R-squared, MSE, RMSE, MAE.

Even if your R-squared values had a greater difference between them, it's not good practice to evaluate models solely by goodness-of-fit measures such as R-squared, the Akaike information criterion, etc. Also, if your models have different numbers of predictors, you should be looking at adjusted R-squared rather than the regular R-squared.

The `eval` function evaluates an expression, but `"5+5"` is a string, not an expression. Use `parse` with `text=` to turn the string into an expression first.

Model evaluation and cross-validation basics. Cross-validation is a model evaluation technique. The central intuition behind model evaluation is to figure out whether the trained model is generalizable, that is, whether the predictive power we observe while training is also to be expected on unseen data.

The lumped-element model (also called lumped-parameter model, or lumped-component model) simplifies the description of the behaviour of spatially distributed physical systems into a topology consisting of discrete entities that approximate the behaviour of the distributed system under certain assumptions.

To evaluate the overall fit of a linear model, we use the R-squared value. R-squared is the proportion of variance in the observed data that is explained by the model, or equivalently the reduction in error over the null model.
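The regression metrics named above can be sketched in a few lines of Python. This assumes the usual definitions: R-squared as 1 − SS_res/SS_tot, where the null model always predicts the mean of the actual values, and adjusted R-squared with p predictors excluding the intercept. The function names are hypothetical, not those of any R package.

```python
import math

def mse(actual, predicted):
    """Mean squared error."""
    return sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual)

def rmse(actual, predicted):
    """Root mean squared error: square root of the MSE, in the target's units."""
    return math.sqrt(mse(actual, predicted))

def r_squared(actual, predicted):
    """R^2 = 1 - SS_res / SS_tot: reduction in squared error relative to a
    null model that always predicts the mean of the actual values."""
    y_bar = sum(actual) / len(actual)
    ss_tot = sum((a - y_bar) ** 2 for a in actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    if ss_tot == 0:
        raise ValueError("R^2 is undefined when all actual values are equal")
    return 1 - ss_res / ss_tot

def adjusted_r_squared(r2, n, p):
    """Penalize R^2 for the number of predictors p (excluding the intercept)."""
    if n - p - 1 <= 0:
        raise ValueError("need more observations than predictors + 1")
    return 1 - (1 - r2) * (n - 1) / (n - p - 1)

actual = [1.0, 2.0, 3.0, 4.0]
print(r_squared(actual, actual))                 # 1.0  (perfect model)
print(r_squared(actual, [2.5] * 4))              # 0.0  (mean-only null model)
print(r_squared(actual, [4.0, 3.0, 2.0, 1.0]))   # -3.0 (worse than the mean model)
```

R-squared computed this way can turn negative on data the model was not fitted to, which is one reason to pair it with error metrics such as RMSE.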
The model was estimated using Model > Logistic regression (GLM). The predictions shown below were generated in the Predict tab. R-functions. For an overview of …

This post will explore using R's MLmetrics package to evaluate machine learning models. MLmetrics provides several functions to calculate common metrics for ML models, including AUC, precision, recall, and accuracy. Building an example model: first, we need to build a model to use as an example.

R-BERT (unofficial): a PyTorch implementation of R-BERT, "Enriching Pre-trained Language Model with Entity Information for Relation Classification". Model architecture. Method: get three vectors from BERT.

As you can see now, R² is a metric that compares your model with a very simple mean model that returns the average of the target values every time, irrespective of the input data. Writing SS_r for the model's residual sum of squares and SS_t for the total sum of squares (the error of the mean model), R² = 1 − SS_r/SS_t. The comparison has 4 cases:

- Case 1: SS_r = 0 (R² = 1). A perfect model with no errors at all.
- Case 2: SS_r > SS_t (R² < 0). The model is even worse than the simple mean model.

Model to evaluate ecosystem services by integrating ecosystem processes and remote sensing data, by the Chinese Academy of Sciences. Schematic representation of the CEVSA-ES model.
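Precision and recall, two of the classification metrics listed for MLmetrics, follow directly from confusion-matrix counts. The Python sketch below uses the standard definitions; it is not the MLmetrics implementation. The function names, the `positive=1` default, and returning 0.0 when a denominator is zero are assumptions made for illustration.

```python
def confusion_counts(actual, predicted, positive=1):
    """Return (true positives, false positives, false negatives, true negatives)."""
    tp = fp = fn = tn = 0
    for a, p in zip(actual, predicted):
        if p == positive and a == positive:
            tp += 1
        elif p == positive:
            fp += 1
        elif a == positive:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn

def precision(actual, predicted, positive=1):
    """Share of predicted positives that are truly positive (0.0 if none predicted)."""
    tp, fp, _, _ = confusion_counts(actual, predicted, positive)
    return tp / (tp + fp) if tp + fp else 0.0

def recall(actual, predicted, positive=1):
    """Share of actual positives the model finds (0.0 if there are none)."""
    tp, _, fn, _ = confusion_counts(actual, predicted, positive)
    return tp / (tp + fn) if tp + fn else 0.0

y_true = [1, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 1, 0, 1]
print(confusion_counts(y_true, y_pred))  # (2, 2, 1, 1)
print(precision(y_true, y_pred))         # 0.5
```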
